The SDR Manager's Guide to AI-Powered Coaching [2026]

How to scale sales coaching without listening to 50 calls a week. Covers AI call scoring, rep profiling, benchmarking, and training bots that actually develop SDRs.

Article written by Mavlonbek

You became an SDR manager because you were good at sales. Now you spend your days in spreadsheets and status meetings, trying to figure out why half your team is hitting quota while the other half is drowning.

The dirty secret of sales management: coaching is the highest-leverage activity you can do, and it's the first thing that gets sacrificed when you're busy.

You know you should be listening to calls. You know you should be doing weekly 1:1s with real feedback. You know you should be identifying what your top performers do differently and replicating it across the team.

But there are only so many hours in the day. And listening to 50 calls a week isn't realistic when you're also forecasting, hiring, running team meetings, fighting fires, and occasionally trying to have a life outside of work.

This is where AI changes the game—not as a gimmick, but as a genuine force multiplier for managers who want to coach at scale without cloning themselves.

This guide breaks down exactly how AI-powered coaching works in 2026, what it can and can't do, and how to implement a system that makes every rep better without burning you out.

Part 1: The Coaching Crisis in Sales Development

Let's start with an uncomfortable truth: most SDR teams are under-coached.

Not because managers don't care. Because the math doesn't work.

The Numbers Problem

Say you manage 8 SDRs. Each rep makes 60-80 dials per day. That's 480-640 calls per day across your team. Even if only 15% connect, you're looking at roughly 70-95 conversations daily.

To properly coach, you'd need to:

Listen to at least 3-5 calls per rep per week (24-40 calls)

Take notes on what went well and what didn't

Identify patterns across multiple calls

Prepare specific feedback for each 1:1

Track improvement over time

Compare against top performers to find gaps

At 3-5 minutes per call (not including note-taking), those 24-40 calls add up to roughly 1-3.5 hours of listening alone. Then add analysis time. Then add the 1:1s themselves. Then add the documentation.

You're easily at 8-10 hours per week just on coaching—and that's the bare minimum to be effective. Most managers don't have 8-10 hours. So coaching becomes reactive. You listen to a call when there's a complaint. You give feedback when you happen to overhear something. You do 1:1s that are really just pipeline reviews disguised as development conversations.

The result: reps plateau. Your A-players stay A-players. Your B-players stay B-players. Your C-players eventually churn. And you wonder why the team isn't improving despite all your efforts.
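To sanity-check the arithmetic in this section, here is a small Python sketch that recomputes daily call volume and weekly listening time from the example inputs (8 reps, 60-80 dials per day, a 15% connect rate, 3-5 reviewed calls per rep at 3-5 minutes each). The constant names are illustrative; swap in your own team's numbers.

```python
# Back-of-the-envelope coaching load for one SDR manager.
# Inputs are this section's example figures; replace them with your own.
REPS = 8
DIALS_PER_REP_PER_DAY = (60, 80)      # low and high estimates
CONNECT_RATE = 0.15                   # share of dials that become conversations
CALLS_REVIEWED_PER_REP = (3, 5)       # per week
MINUTES_PER_CALL = (3, 5)             # listening only, no note-taking


def daily_volume():
    """Team dials and conversations per day, as (low, high) pairs."""
    dials = tuple(REPS * d for d in DIALS_PER_REP_PER_DAY)
    conversations = tuple(round(d * CONNECT_RATE) for d in dials)
    return dials, conversations


def weekly_listening_hours():
    """Calls to review per week and the hours of listening they take."""
    calls = tuple(REPS * c for c in CALLS_REVIEWED_PER_REP)
    # Pair low with low and high with high to get the full range.
    hours = tuple(c * m / 60 for c, m in zip(calls, MINUTES_PER_CALL))
    return calls, hours


if __name__ == "__main__":
    dials, conversations = daily_volume()
    calls, hours = weekly_listening_hours()
    print(f"Dials per day: {dials[0]}-{dials[1]}")
    print(f"Conversations per day: about {conversations[0]}-{conversations[1]}")
    print(f"Calls to review per week: {calls[0]}-{calls[1]}")
    print(f"Listening time per week: {hours[0]:.1f}-{hours[1]:.1f} hours")
```

With these inputs it reports 480-640 dials and about 72-96 conversations a day, plus roughly 1.2-3.3 hours of pure listening per week, before any note-taking, analysis, or 1:1s.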

What Good Coaching Actually Requires

Effective sales coaching isn't mysterious. It requires:

Visibility: You need to actually know what's happening on calls

Objectivity: Feedback should be based on evidence, not vibes

Specificity: "Be better at discovery" isn't actionable. "You asked 2 questions in a 4-minute call—aim for 5-6" is.

Consistency: Coaching once a quarter doesn't move the needle

Benchmarking: Reps need to know what "good" looks like

Practice: Feedback without repetition doesn't create skill development

The traditional model fails because it depends entirely on manager bandwidth. When you're stretched thin, visibility drops, feedback becomes vague, consistency disappears, and practice never happens.

AI doesn't replace the manager. It solves the bandwidth problem so the manager can actually do the high-value work: interpreting insights, having meaningful conversations, and making judgment calls about development priorities.

Part 2: The AI Coaching Stack—What's Actually Possible in 2026

Let's get specific about what AI can do for sales coaching today. Not the hype. The reality.

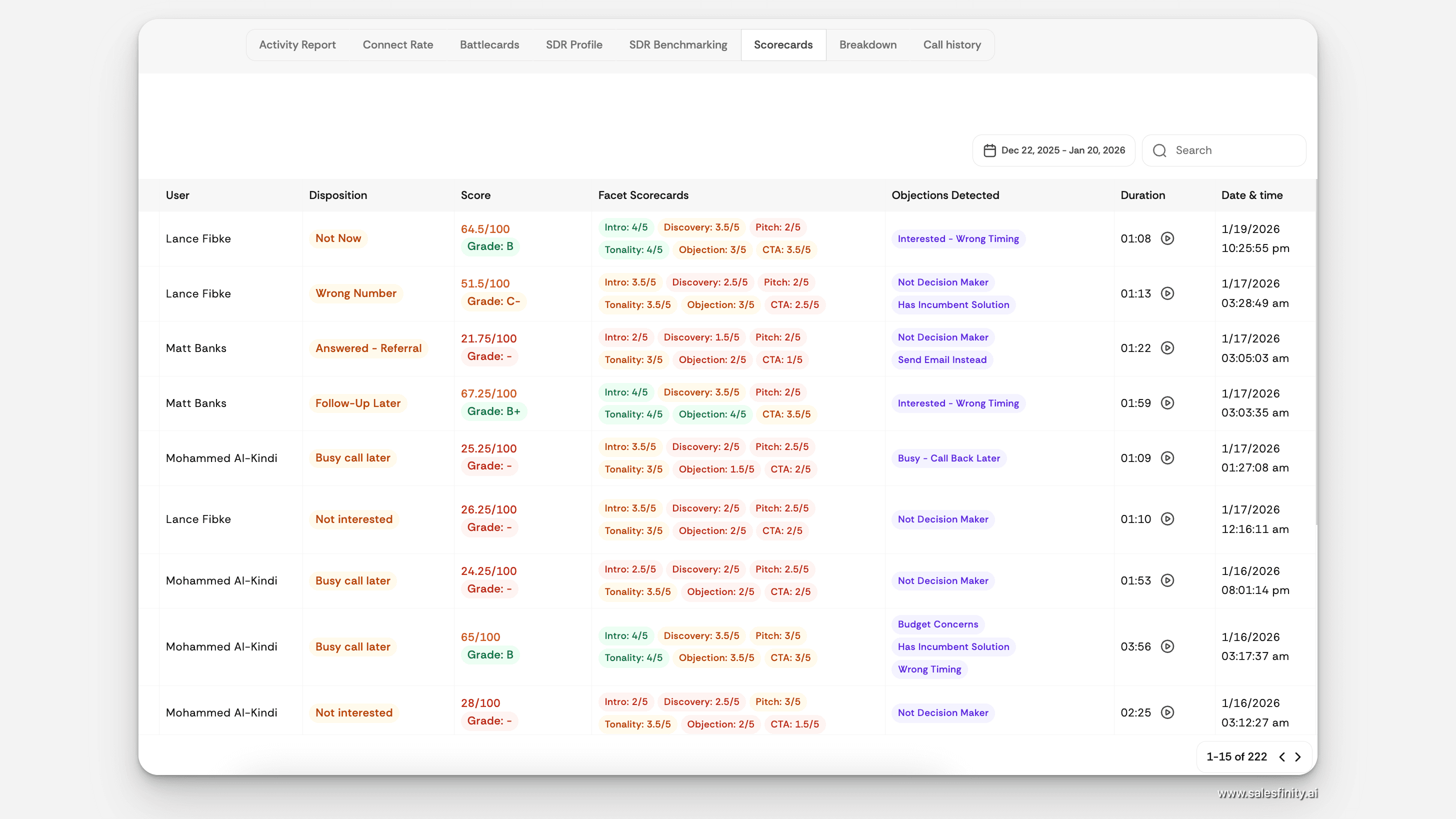

Capability 1: Automatic Call Scoring

The most immediate value of AI coaching is removing the need to manually listen to every call.

Modern AI can analyze recorded sales conversations and score them across multiple dimensions:

Behavioral metrics:

Talk-to-listen ratio (how much is the rep talking vs. the prospect?)

Words per minute (pacing—too fast, too slow, just right?)

Interruptions per call (are they stepping on the prospect?)

Question count and type (discovery depth)

Pitch duration (are they monologuing?)

Skill assessment:

Opener quality (clarity, tone, pattern interrupt effectiveness)

Discovery depth (relevance and progression of questions)

Pitch personalization (did they connect features to stated pain?)

Objection handling (how did they respond under pressure?)

Tonality (confidence, empathy, energy)

Call-to-action clarity (did they actually ask for the meeting?)

Fit analysis:

Did they reach the right persona?

Was the messaging aligned with the prospect's context?

What's the predicted conversion likelihood based on the conversation?
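Most of the behavioral metrics above can be derived from a diarized, timestamped transcript. The sketch below assumes a hypothetical `Segment` record (speaker label, start and end in seconds, text) and computes talk ratio, rep words per minute, question count, interruptions, and the longest uninterrupted rep stretch as a rough stand-in for pitch duration. Counting question marks is a crude proxy for questions asked; commercial scoring tools will use more sophisticated methods.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str   # "rep" or "prospect" (hypothetical diarization labels)
    start: float   # seconds from call start
    end: float
    text: str


def behavioral_metrics(segments):
    """Rough behavioral metrics from a diarized, timestamped transcript."""
    segments = sorted(segments, key=lambda s: s.start)
    rep = [s for s in segments if s.speaker == "rep"]
    rep_seconds = sum(s.end - s.start for s in rep)
    # Overlapping speech counts toward both speakers' time.
    total_seconds = sum(s.end - s.start for s in segments)
    rep_words = sum(len(s.text.split()) for s in rep)

    # Interruption: the rep starts a turn before the prospect's turn has ended.
    interruptions = sum(
        1
        for prev, cur in zip(segments, segments[1:])
        if prev.speaker == "prospect" and cur.speaker == "rep" and cur.start < prev.end
    )

    # Longest monologue: consecutive rep segments treated as one stretch.
    longest, run_start = 0.0, None
    for s in segments:
        if s.speaker == "rep":
            if run_start is None:
                run_start = s.start
            longest = max(longest, s.end - run_start)
        else:
            run_start = None

    return {
        "talk_ratio": rep_seconds / total_seconds if total_seconds else 0.0,
        "words_per_minute": rep_words / (rep_seconds / 60) if rep_seconds else 0.0,
        "questions": sum(s.text.count("?") for s in rep),  # crude proxy
        "interruptions": interruptions,
        "longest_monologue_s": longest,
    }


if __name__ == "__main__":
    call = [
        Segment("rep", 0, 10, "Hi Sarah, this is James with Acme. Did I catch you at a bad time?"),
        Segment("prospect", 10, 14, "I have a minute."),
        Segment("rep", 13.5, 30, "How are you booking meetings today? Who owns outbound?"),
        Segment("prospect", 30, 50, "We use a dialer and two SDRs."),
    ]
    print(behavioral_metrics(call))
```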

Instead of listening to 40 calls, you look at 40 scorecards. You can sort by lowest scores to find calls that need attention. You can filter by skill area to see who's struggling with objection handling. You can spot patterns in seconds that would take hours to identify manually.
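Once scorecards exist as structured data, sorting and filtering them takes a few lines. This sketch uses a hypothetical record shape (`rep`, `call_id`, `overall`, and per-skill `skills`) and an assumed cutoff of 60 for "struggling"; a real platform's export will look different.

```python
# Hypothetical scorecard records; a real platform's export format will differ.
scorecards = [
    {"rep": "Ana", "call_id": "a1", "overall": 48,
     "skills": {"discovery": 52, "objection_handling": 41}},
    {"rep": "Ben", "call_id": "b1", "overall": 81,
     "skills": {"discovery": 78, "objection_handling": 85}},
    {"rep": "Cam", "call_id": "c1", "overall": 63,
     "skills": {"discovery": 70, "objection_handling": 55}},
]


def lowest_scoring(cards, n=10):
    """Calls most in need of a listen, worst first."""
    return sorted(cards, key=lambda c: c["overall"])[:n]


def reps_struggling_with(cards, skill, threshold=60):
    """Reps with at least one call below `threshold` on `skill`.

    A call missing that skill is treated as not struggling.
    """
    return sorted({c["rep"] for c in cards if c["skills"].get(skill, threshold) < threshold})


if __name__ == "__main__":
    print([c["call_id"] for c in lowest_scoring(scorecards, n=2)])
    print(reps_struggling_with(scorecards, "objection_handling"))
```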

What Salesfinity does here: After every call over 60 seconds, Salesfinity automatically generates a scorecard evaluating the conversation across persona fit, behavioral metrics, and six core skill areas. Every score is backed by AI explanations showing exactly why it was graded that way—with timestamps and transcript evidence. This creates an objective foundation for coaching conversations.

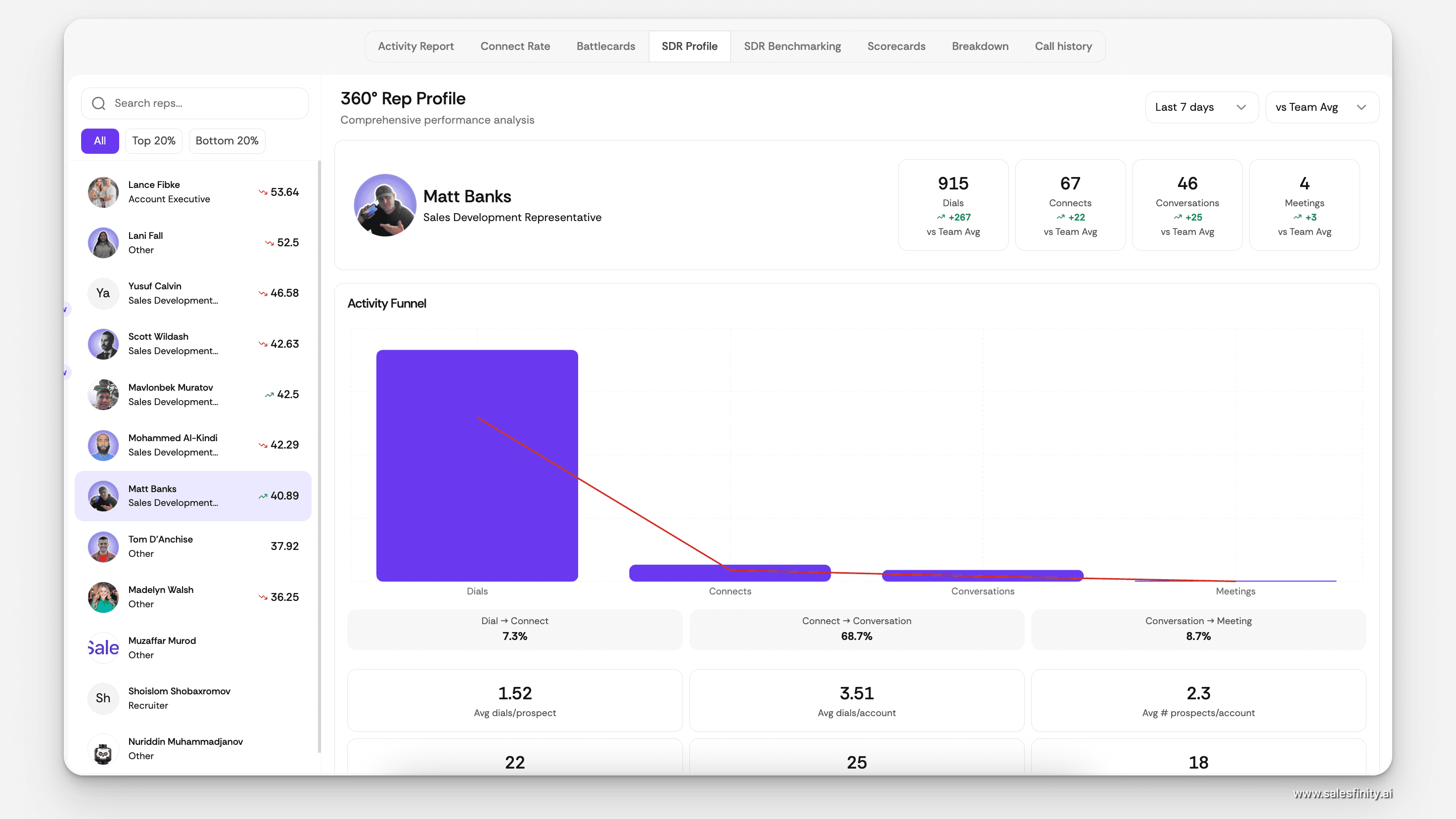

Capability 2: 360-Degree Rep Profiles

Individual call scores are useful. Aggregate profiles are transformational.

When you combine scoring data across dozens or hundreds of calls, you get a complete picture of each rep's strengths and weaknesses:

Activity funnel:

Dials → Connects → Conversations → Meetings

Where is the funnel leaking? Low connects might be a data problem. Low conversation-to-meeting might be a skill problem.

Performance metrics:

Dial efficiency

Connect rate

Meeting rate

Conversion by persona type

Behavioral patterns:

Average talk ratio across all calls

Typical opener length

Pacing tendencies

How often they include a clear CTA

Competency radar:

Visual breakdown across all six skill areas

Easy to see where someone excels vs. struggles

Objection trends:

What objections does this rep encounter most?

How effectively do they handle each type?

Are certain objections consistently derailing them?

Prospect reactions:

Sentiment analysis across calls

How do prospects typically respond to this rep?
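The funnel-leak question (data problem or skill problem?) comes down to comparing stage-to-stage conversion rates with the team's. A minimal sketch, assuming each funnel is a dict of weekly counts keyed by the hypothetical stage names below:

```python
STAGES = ["dials", "connects", "conversations", "meetings"]


def stage_rates(funnel):
    """Conversion rate between each adjacent pair of funnel stages."""
    return {
        f"{a}->{b}": (funnel[b] / funnel[a]) if funnel[a] else 0.0
        for a, b in zip(STAGES, STAGES[1:])
    }


def biggest_leak(rep_funnel, team_funnel):
    """Stage where the rep trails the team by the widest relative margin."""
    rep, team = stage_rates(rep_funnel), stage_rates(team_funnel)
    return min(rep, key=lambda k: rep[k] / team[k] if team[k] else float("inf"))


if __name__ == "__main__":
    rep = {"dials": 300, "connects": 30, "conversations": 24, "meetings": 2}
    team = {"dials": 300, "connects": 36, "conversations": 27, "meetings": 5}
    print(stage_rates(rep))
    print("Biggest leak:", biggest_leak(rep, team))
```

In line with the distinction above, a leak at `dials->connects` tends to point at list quality or timing, while one at `conversations->meetings` points at skill.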

This profile becomes the foundation for your 1:1s. Instead of asking "how are things going?" you walk in knowing exactly what's happening. You can say: "I noticed your discovery scores have dropped over the past two weeks—you're averaging 2.3 questions per call when your benchmark was 4.1. What's going on?"

That's a completely different conversation than "I listened to a call yesterday and you didn't ask enough questions."

What Salesfinity does here: The 360° Rep Profile compiles every call, score, and behavior metric into one unified view. Managers can see activity funnels, competency radars, objection trends, and prospect sentiment—all updated automatically. This powers data-backed 1:1 sessions where coaching is objective and actionable.

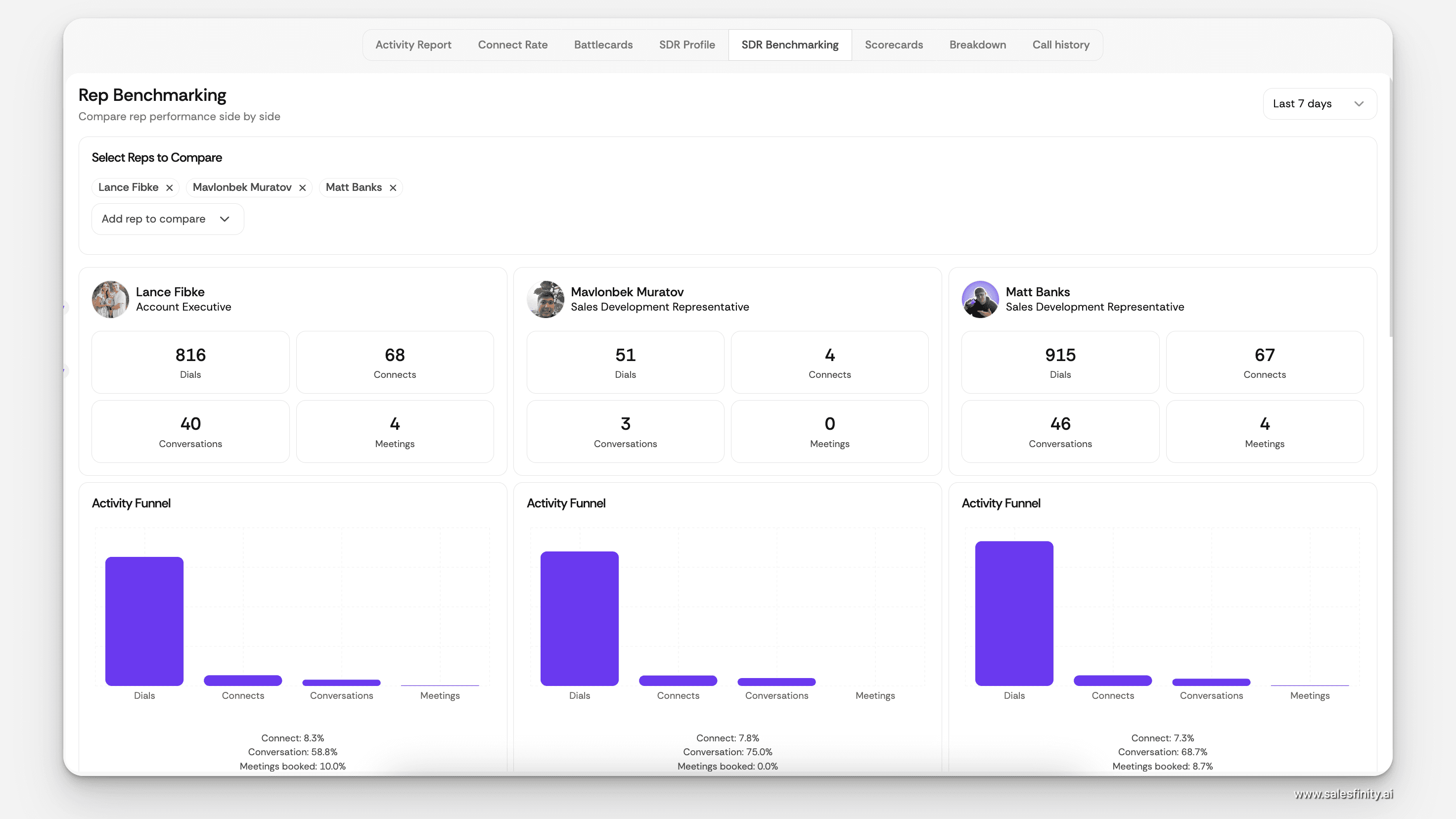

Capability 3: Rep Benchmarking

Here's where AI coaching gets really powerful: comparing reps to each other.

When you can see two (or more) reps side-by-side, patterns emerge that are invisible when looking at individuals in isolation:

Why does Rep A convert 28% of conversations while Rep B converts 12%?

What does Rep A do in the first 30 seconds that Rep B doesn't?

How does Rep A handle the "we're all set" objection compared to Rep B?

What's different about their pacing, question patterns, pitch structure?

Benchmarking answers these questions with data. You're not guessing why one rep outperforms another. You're seeing the specific behavioral and skill differences that drive outcomes.

This has two powerful applications:

For struggling reps: You can identify exactly what they need to change by showing them what high performers do differently. "Sarah books 3x as many meetings as you. Here's the data: she asks twice as many discovery questions, her talk ratio is 15 points lower, and she handles budget objections with this specific framework. Let's work on those three things."

For the whole team: You can identify winning patterns and systematize them. If your top 3 reps all do something specific in their opener, that should become part of your training for everyone.

What Salesfinity does here: The benchmarking feature lets you compare SDRs side-by-side across activity metrics, skill radars, objection handling effectiveness, and prospect sentiment. You can anchor to your best performers and see exactly what they're doing differently—then replicate those patterns across the team.

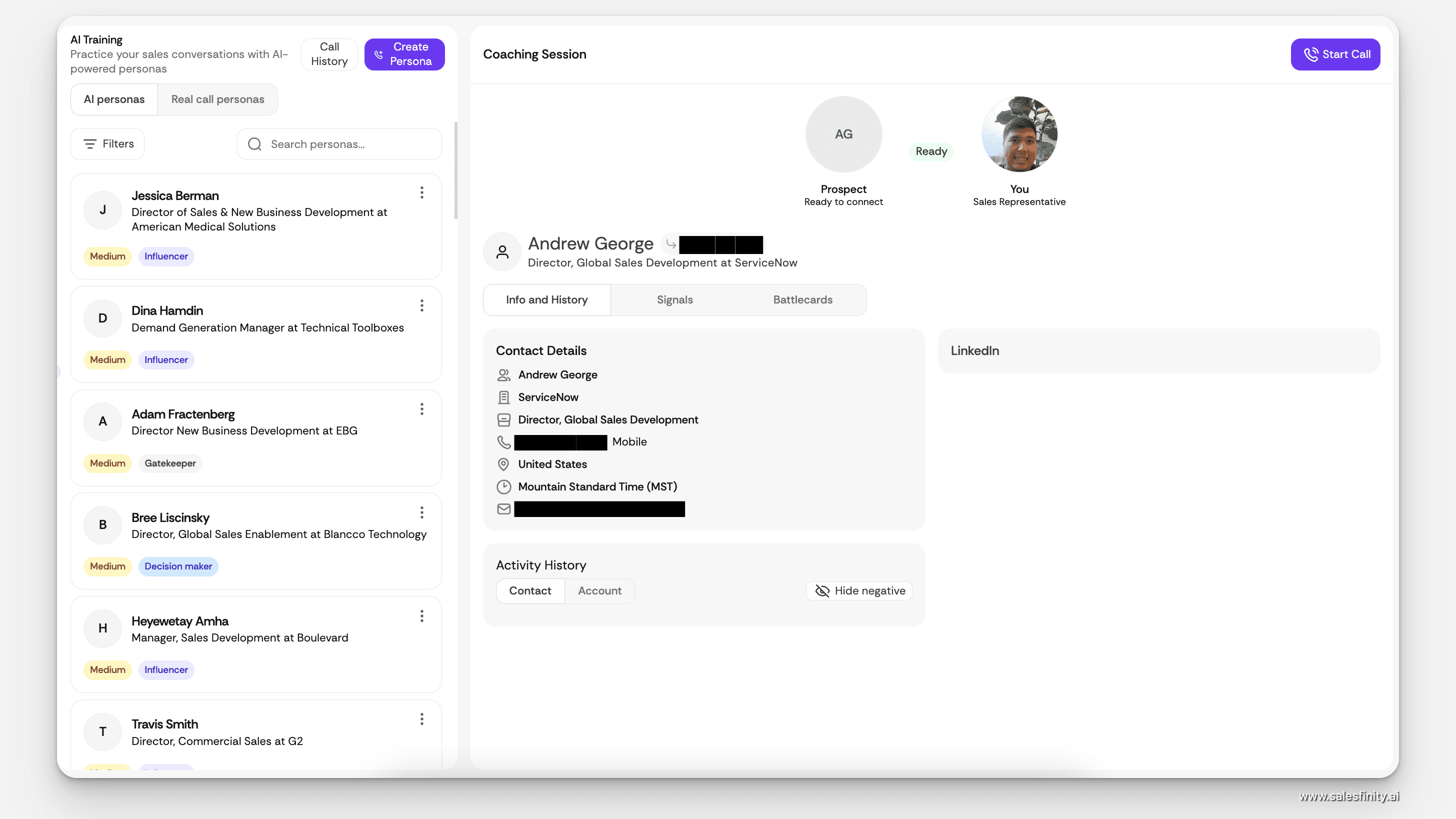

Capability 4: AI Practice Partners

Feedback is necessary but not sufficient. Reps need repetitions to actually build skills.

The problem: you can't have reps practice on real prospects. Every live call is high-stakes. Mistakes cost pipeline.

Traditional solutions—role-playing with peers, practicing with managers—are time-consuming and inconsistent. And they're awkward. Reps know their colleague isn't actually a skeptical CFO.

AI training bots solve this by creating realistic practice environments on demand.

Modern AI can simulate prospects with:

Specific personas (CFO, VP Sales, Director of Marketing)

Configurable personalities (friendly, skeptical, distracted, hostile)

Realistic objections (budget, timing, competition, no interest)

Adjustable difficulty levels

Industry and company context

Reps can practice their opener 20 times in an afternoon. They can drill objection handling until responses become automatic. They can experiment with different approaches without any risk.

The best implementations take this further by connecting to actual call history. Instead of generic personas, the AI generates practice scenarios based on real prospects the rep has called—with similar pain points, objection patterns, and personality traits. They're practicing for conversations they'll actually have.

What Salesfinity does here: AI Training Bots are custom-built virtual prospects that behave like real decision-makers. You can configure everything: title, industry, personality, objection style, difficulty level. But here's the differentiator—Salesfinity connects to your call history and automatically generates clones of people your reps have actually spoken with. So they're not practicing with generic personas; they're practicing with digital avatars of their real prospects. After each practice session, the AI scores the call and suggests specific improvements.

Part 3: The AI Coaching Workflow—Putting It All Together

Tools are useless without a system. Here's how to operationalize AI coaching into your weekly management rhythm.

Daily: The Dashboard Scan (5 minutes)

Start each day with a quick scan of yesterday's activity:

What to look for:

Any calls with unusually low scores (potential coaching moments)

Reps with significant score changes (positive or negative trends)

Meeting conversion rates (is the team booking?)

Connect rate anomalies (data or timing issues?)

This takes 5 minutes and replaces the hour you'd spend trying to piece together activity from CRM reports and random call listening.

Action triggers:

Score below 50 → Flag for review

Score dropped 20+ points vs. average → Check in with rep

High-scoring call → Share with team as example
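These three triggers translate directly into rules. This sketch assumes each call carries a 0-100 score, compares each call with the rep's own trailing average (you could equally compare a rep's daily average), and uses a hypothetical cutoff of 85 for "high-scoring," since no number is given above.

```python
HIGH_SCORE = 85  # hypothetical cutoff for "high-scoring"; tune to your scale


def daily_triggers(calls, rep_averages):
    """Apply the daily action triggers to yesterday's scored calls.

    calls: list of {"rep", "call_id", "score"} dicts, scores on a 0-100 scale.
    rep_averages: each rep's trailing average score.
    """
    actions = {"flag_for_review": [], "check_in": set(), "share_with_team": []}
    for call in calls:
        score = call["score"]
        if score < 50:
            actions["flag_for_review"].append(call["call_id"])
        if rep_averages[call["rep"]] - score >= 20:
            actions["check_in"].add(call["rep"])  # one check-in per rep
        if score >= HIGH_SCORE:
            actions["share_with_team"].append(call["call_id"])
    actions["check_in"] = sorted(actions["check_in"])
    return actions


if __name__ == "__main__":
    calls = [
        {"rep": "Ana", "call_id": "a1", "score": 45},
        {"rep": "Ben", "call_id": "b1", "score": 90},
        {"rep": "Ben", "call_id": "b2", "score": 60},
    ]
    print(daily_triggers(calls, {"Ana": 72, "Ben": 65}))
```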

Weekly: 1:1 Prep and Execution

The 1:1 is where coaching actually happens. AI makes it dramatically more effective.

Before the 1:1 (10 minutes per rep):

Pull up the rep's 360° profile. Review:

Activity metrics: Are they hitting dial/connect/meeting targets?

Score trends: Are skill scores improving, declining, or flat?

Competency gaps: What's their weakest skill area?

Objection patterns: What's tripping them up?

2-3 specific calls: Low-scoring calls you want to discuss

Come in with a clear agenda. You're not fishing for topics—you know exactly what to focus on.

During the 1:1 (30 minutes):

Structure your conversation:

First 5 minutes: Check-in, recent wins, any blockers

Next 15 minutes: Skill development focus

Share the data: "Your discovery scores averaged 62 this week, down from 71 last week."

Get context: "What do you think is driving that?"

Listen to a specific call together (you've already identified it)

Collaborative diagnosis: What happened? What would you do differently?

Specific action: "This week, I want you to hit at least 4 discovery questions per call. Track it yourself."

Final 10 minutes: Pipeline review, priorities for the week

After the 1:1 (2 minutes):

Document the focus area and specific commitment. You'll check progress next week.

Weekly: Team Benchmarking Review (30 minutes)

Once a week, look at the team holistically:

Comparative analysis:

Pull up the benchmarking view

Who's the top performer this week? What's driving it?

Who's struggling? What skills are lagging?

Are there patterns across multiple reps?

Identify coaching priorities:

If 4/8 reps are weak on objection handling, that's a team training need

If 1 rep is lagging across the board, that's an individual intervention

If your top performer has a specific behavior others lack, that's a best practice to share
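The first two of these rules can be expressed as a simple classifier. In this sketch, "weak" means a score below an assumed cutoff of 60, a skill becomes a team training need when at least half the team is weak on it (4 of 8 reps in the example), and a rep counts as "lagging across the board" when they sit below the team average on every skill. All three thresholds are assumptions to tune.

```python
from statistics import mean


def coaching_priorities(scores, weak_below=60, team_share=0.5):
    """Split coaching needs into team-wide skills and individual interventions.

    scores: {rep: {skill: score}}, with every rep scored on the same skills.
    """
    reps = list(scores)
    skills = list(next(iter(scores.values())))
    # Team need: a large enough share of reps is weak on the skill.
    team_needs = [
        s for s in skills
        if sum(scores[r][s] < weak_below for r in reps) / len(reps) >= team_share
    ]
    # Individual intervention: below the team average on every skill.
    team_avg = {s: mean(scores[r][s] for r in reps) for s in skills}
    lagging = [r for r in reps if all(scores[r][s] < team_avg[s] for s in skills)]
    return team_needs, lagging


if __name__ == "__main__":
    team = {
        "Ana": {"opener": 80, "objections": 55},
        "Ben": {"opener": 75, "objections": 58},
        "Cam": {"opener": 50, "objections": 45},
        "Dee": {"opener": 85, "objections": 70},
    }
    print(coaching_priorities(team))
```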

Team meeting content:

Use high-scoring calls as examples in team meetings

Share specific clips: "Here's how Jordan handled the 'we're all set' objection—notice how she acknowledged, asked a follow-up question, then repositioned."

This lands far better than generic training because it's your team, your prospects, your context

Monthly: Practice Sessions

Schedule dedicated practice time using AI training bots:

Individual practice (ongoing):

Reps should do 15-20 minutes of AI practice daily

Focus on their specific weak areas identified in scorecards

Track improvement in practice scores over time

Structured team practice (monthly):

Create practice scenarios based on common objections your team faces

Have reps practice the same scenario, then compare approaches

Identify winning techniques and socialize them

New hire onboarding:

AI practice before going live on real calls

Accelerate ramp by getting in hundreds of practice repetitions before the first real dial

Score practice calls to know when they're ready for live fire

Part 4: The Six Skills AI Coaching Should Measure

Not all call scoring is created equal. The best systems evaluate the skills that actually predict success in cold calling.

Here's the framework:

Skill 1: The Opener (First 30 Seconds)

The opener determines whether you earn the right to continue. AI should evaluate:

Clarity: Did the rep clearly identify themselves and their company?

Pattern interrupt: Did they acknowledge the cold call dynamic?

Permission-based language: Did they ask for a moment rather than launching into a pitch?

Tone: Confident but not aggressive? Warm but not weak?

Pacing: Rushed and nervous, or controlled and calm?

What good looks like: "Hi Sarah, this is James with Acme—you weren't expecting my call. It's actually the first time I've reached you, and I was hoping you could help me out for a brief moment."

What bad looks like: "Hi, uh, this is James calling from Acme Solutions, how are you doing today? Great, great. So the reason for my call is..."

Skill 2: Discovery (Questions and Listening)

Cold calls aren't about pitching—they're about qualifying and generating interest through dialogue.

Question count: How many questions did the rep ask?

Question quality: Open-ended vs. closed? Relevant to persona?

Progression: Did questions build on each other logically?

Talk ratio: What percentage of the call was the rep talking vs. listening?

Active listening signals: Did they acknowledge and build on what the prospect said?

Benchmark: Strong cold callers ask 4-6 questions in a 3-5 minute conversation with a talk ratio under 45%.
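Here is that benchmark written as a check. It assumes you already have the question count, the call length in seconds, and the rep's talk ratio as a fraction (for example, from your scoring tool), and it only applies the rule to 3-5 minute conversations, since that is the range the benchmark describes.

```python
def discovery_gaps(questions, call_seconds, talk_ratio):
    """Compare one call with the 4-6 questions / under-45%-talk benchmark.

    Returns None for calls outside the 3-5 minute range, otherwise a list of
    gaps (an empty list means the call meets the benchmark).
    """
    if not 180 <= call_seconds <= 300:
        return None
    gaps = []
    if questions < 4:
        gaps.append(f"asked {questions} questions; aim for 4-6")
    elif questions > 6:
        gaps.append(f"asked {questions} questions; above the 4-6 range")
    if talk_ratio >= 0.45:
        gaps.append(f"talk ratio {talk_ratio:.0%}; aim for under 45%")
    return gaps


if __name__ == "__main__":
    print(discovery_gaps(questions=2, call_seconds=240, talk_ratio=0.6))
```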

Skill 3: The Pitch (Value Communication)

When reps do pitch, it should be concise and relevant.

Brevity: Was the pitch under 30 seconds?

Problem-outcome structure: Did they lead with pain, not features?

Personalization: Did the pitch connect to anything the prospect said?

Social proof: Did they mention relevant customers or results?

Clarity: Could a 5th grader understand what they sell?

What good looks like: "We help outbound teams whose reps are burning hours dialing and hitting voicemails. Salesfinity gives them 20-30 live connects a day, so they build more pipeline with fewer dials."

What bad looks like: "So Acme is a comprehensive sales engagement platform that leverages AI-powered automation to streamline multichannel outreach sequences with intelligent cadence optimization..."

Skill 4: Objection Handling (Responding Under Pressure)

Every cold call hits resistance. How reps respond separates good from great.

Composure: Did they stay calm or get defensive?

Acknowledgment: Did they validate the objection before responding?

Curiosity: Did they ask follow-up questions to understand the objection?

Reframe: Did they effectively address the concern?

Persistence: Did they give up too easily or push too hard?

Common objections to track:

"Not interested" / "We're all set"

"Send me an email"

"Now isn't a good time"

"We already have a solution"

"I'm not the right person"

"What's this about?" (gatekeeper)

Skill 5: Tonality (How They Sound)

The same words delivered differently produce completely different outcomes.

Confidence: Do they sound like they believe in what they're saying?

Energy: Appropriate enthusiasm without desperation?

Pacing: Words per minute—too fast signals nerves, too slow loses attention

Warmth: Do they sound like someone you'd want to talk to?

Control: Are they leading the conversation or being led?

Tonality is hard to coach through text feedback. AI that can identify tonal issues helps reps understand something they can't see in a transcript.

Skill 6: Call-to-Action (Closing for Next Steps)

Every call should end with a clear ask.

Specificity: Did they propose specific times? ("Tuesday at 10 or Wednesday at 2")

Soft close language: Did they reduce pressure? ("Would you be opposed to...")

Outcome-focused: Did they tie the meeting to a benefit, rather than asking for the meeting for its own sake?

Persistence: If the first ask was declined, did they pivot appropriately?

What good looks like: "Would you be opposed to carving out 15 minutes next Tuesday at 10 to see if we could cut your team's dialing time in half?"

What bad looks like: "So yeah, would you want to, like, maybe hop on a call sometime to learn more about what we do?"

Part 5: Running Data-Driven 1:1s

The 1:1 is where coaching converts to skill development. Here's how to run them effectively with AI data.

The Old Way vs. The New Way

Old way:

Manager: "How's it going?"

Rep: "Good, pretty good week."

Manager: "How are your numbers looking?"

Rep: "I booked 3 meetings."

Manager: "Nice. Anything I can help with?"

Rep: "Nope, all good."

(30 minutes of wasted time)

New way:

Manager: "I looked at your scorecard data. Your opener scores jumped from 64 to 78 this week—great progress. But your discovery scores dropped from 71 to 58. You're averaging 2 questions per call, down from 4. What's going on?"

Rep: "Honestly, I've been rushing to get through my list. I feel pressure to hit dial numbers."

Manager: "That's a trap. Let's look at this call from Tuesday..."

(Actual coaching happens)

The 1:1 Prep Checklist

Before every 1:1, pull this data:

Activity snapshot:

Dials this week vs. target

Connect rate vs. team average

Conversations vs. target

Meetings booked vs. target

Skill scores (week-over-week):

Opener: __ → __

Discovery: __ → __

Pitch: __ → __

Objection handling: __ → __

Tonality: __ → __

CTA: __ → __

Patterns:

Most common objections encountered

Objection handling success rate

Prospect sentiment trends

Calls to discuss:

1 low-scoring call (coaching opportunity)

1 high-scoring call (reinforce what's working)
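Filling in the week-over-week lines and choosing a single focus area is mechanical once scores are exported. A sketch, assuming hypothetical keys for the six skill areas and scores on a 0-100 scale; it picks the biggest drop, or the lowest current score if nothing dropped, so each 1:1 centers on one skill.

```python
SKILLS = ["opener", "discovery", "pitch", "objection_handling", "tonality", "cta"]


def week_over_week(last_week, this_week):
    """Change in each skill score (positive means improved)."""
    return {s: this_week[s] - last_week[s] for s in SKILLS}


def pick_focus_skill(last_week, this_week):
    """One focus area per 1:1: the biggest drop, else the lowest current score."""
    deltas = week_over_week(last_week, this_week)
    biggest_drop = min(SKILLS, key=lambda s: deltas[s])
    if deltas[biggest_drop] < 0:
        return biggest_drop
    return min(SKILLS, key=lambda s: this_week[s])


if __name__ == "__main__":
    last = {"opener": 64, "discovery": 71, "pitch": 70,
            "objection_handling": 66, "tonality": 72, "cta": 60}
    this = {"opener": 78, "discovery": 58, "pitch": 71,
            "objection_handling": 66, "tonality": 73, "cta": 72}
    for skill, delta in week_over_week(last, this).items():
        print(f"{skill}: {last[skill]} -> {this[skill]} ({delta:+d})")
    print("Focus:", pick_focus_skill(last, this))
```

The opener (64 to 78) and discovery (71 to 58) values mirror the "new way" 1:1 example; the other four are illustrative.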

The 1:1 Conversation Framework

Step 1: Acknowledge progress (2 minutes)

Start positive. Identify something that improved, even if small. "Your CTA scores are up 12 points—you're asking for meetings more clearly. I can see the work you're putting in."

Step 2: Present the focus area (3 minutes)

Be direct about what needs work. Use data, not opinion. "Here's what I'm seeing in the numbers: your discovery is the gap right now. You're at 58 this week, which is 15 points below your average and 22 points below the top-performer benchmark."

Step 3: Listen to their perspective (5 minutes)

Don't assume you know why. "What do you think is driving that?" Often reps have context you don't. Maybe they're dealing with a tough list segment. Maybe they're experimenting with a new approach. Maybe they're just rushing.

Step 4: Review a specific call together (10 minutes)

Abstract feedback doesn't stick. Specific feedback does.

Pull up a call you've pre-selected. Listen to the relevant section together. Pause at key moments:

"Right here, after they said they're not interested—what options did you have?"

"Notice how short that discovery phase was. Where could you have gone deeper?"

"What question could you have asked here instead of pivoting to the pitch?"

Let them diagnose before you prescribe. Self-identified insights stick better than imposed ones.

Step 5: Set a specific commitment (5 minutes)

End with something concrete and measurable:

"This week, I want you to hit at least 4 discovery questions per call. Track it yourself, and we'll look at the data next week."

"Practice objection handling for 15 minutes every morning using the AI training bot. Focus on 'we're all set' since that's tripping you up most."

"Before each call, write down one question you want to ask about their specific situation. Make discovery intentional."

Step 6: Quick pipeline check (5 minutes)

Don't skip the business. Review hot opportunities, stuck deals, priorities for the week. But keep it brief—this isn't the main event.

Following Up Between 1:1s

Coaching doesn't end when the meeting ends.

Mid-week check-in: Quick Slack message. "How's the discovery focus going? Seeing any changes?"

Share examples: When you see a call that exemplifies what you discussed, send it. "This is exactly what I'm talking about—nice work."

Public recognition: When scores improve, call it out in team settings. "Jordan's discovery scores jumped 18 points this week. She's been intentional about asking more questions before pitching."

Part 6: Benchmarking and Best Practice Extraction

Individual coaching is necessary. Team-wide improvement requires systematic benchmarking.

How to Run a Benchmarking Analysis

Step 1: Identify your top performers

Not just by meeting output—by efficiency metrics:

Highest conversation-to-meeting conversion

Highest average call scores

Best objection handling success rates

Step 2: Compare against the middle of the pack

Pull up side-by-side comparisons. Look for differences in:

Talk ratio (top performers usually talk less)

Question count (top performers usually ask more)

Opener scores (top performers hook faster)

Objection patterns (top performers handle them better, or face fewer because they qualify better)
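The side-by-side comparison boils down to computing, metric by metric, how far a rep trails the top performer, remembering that for some metrics (talk ratio) lower is better. A minimal sketch with hypothetical metric names; gaps are reported in each metric's own units, so they are not comparable across metrics.

```python
def trailing_metrics(rep, top, higher_is_better):
    """Metrics where `rep` trails `top`, with the gap in each metric's own units."""
    gaps = {}
    for metric, high_good in higher_is_better.items():
        gap = top[metric] - rep[metric] if high_good else rep[metric] - top[metric]
        if gap > 0:
            gaps[metric] = gap
    return gaps


if __name__ == "__main__":
    direction = {"questions_per_call": True, "talk_ratio_pct": False,
                 "opener_score": True, "conv_to_meeting_pct": True, "cta_score": True}
    rep = {"questions_per_call": 2.5, "talk_ratio_pct": 62, "opener_score": 70,
           "conv_to_meeting_pct": 6, "cta_score": 75}
    top = {"questions_per_call": 5.0, "talk_ratio_pct": 47, "opener_score": 82,
           "conv_to_meeting_pct": 18, "cta_score": 70}
    print(trailing_metrics(rep, top, direction))
```

Here the rep leads on CTA, so that metric drops out, and the remaining gaps (twice as many questions, a talk ratio 15 points lower) become the coaching agenda.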

Step 3: Identify specific behavioral differences

Get granular. It's not enough to know "Sarah is better at discovery." You need to know:

How many questions does Sarah ask vs. average?

What types of questions does Sarah ask?

When in the call does Sarah ask them?

How does Sarah transition from discovery to pitch?

Step 4: Extract teachable patterns

Turn findings into frameworks your whole team can use:

"Top performers ask 5-6 questions before pitching. Average performers ask 2-3."

"Top performers use this specific language to handle 'we're all set': acknowledge → curiosity question → reframe."

"Top performers spend the first 45 seconds purely on rapport and permission. Average performers pitch within 20 seconds."

Step 5: Systematize through training

Once you've identified winning patterns:

Document them in your playbook

Create AI training scenarios that drill them

Set scorecard benchmarks that reflect them

Review progress in team meetings

The Monthly Benchmarking Review

Block 2 hours monthly for deep benchmarking analysis:

Hour 1: Data review

Pull team-wide metrics

Identify top/bottom performers

Run side-by-side comparisons

Document key findings

Hour 2: Action planning

What patterns should become team standards?

Who needs individual intervention?

What training content should you create?

What should you cover in the next team meeting?

Part 7: AI Practice—Building Skills Through Repetition

Feedback without practice is theater. Reps need repetitions to convert knowledge into skill.

Why Traditional Practice Falls Short

Role-playing with peers:

Awkward—everyone knows it's not real

Inconsistent—peer quality varies

Time-consuming—takes two people's schedules

Limited—you can only practice so many scenarios

Role-playing with managers:

Intimidating—reps hold back

Expensive—manager time is scarce

Infrequent—maybe monthly if you're lucky

Live calls:

High-stakes—mistakes cost pipeline

Uncontrolled—you can't repeat scenarios

No feedback loop—you don't know what worked until the meeting shows (or doesn't)

How AI Practice Changes the Game

AI training bots let reps practice on demand with:

Realistic simulation:

Prospects that sound and respond like real people

Objections delivered naturally in conversation

Personality types that challenge in different ways

Context-aware responses that adapt to what the rep says

Controlled difficulty:

Easy mode for new reps building confidence

Medium mode for skill refinement

Hard mode for stress-testing high performers

Adjustable objection frequency and intensity

Immediate feedback:

Call scored against the same rubric as real calls

Specific feedback on what worked and didn't

Improvement suggestions for next attempt

Progress tracking over time

Unlimited repetitions:

Practice the opener 50 times until it's automatic

Drill budget objection handling until the response is instinctive

Experiment with different approaches without consequence

The Salesfinity Difference: Practicing with Your Real Prospects

Generic personas are okay. Prospect clones are better.

Salesfinity's AI training bots connect to your call history and generate practice scenarios based on real people your reps have called. If a rep struggled with a skeptical CFO last week, they can practice with an AI version of that exact persona—same industry, similar pain points, comparable objection style.

This isn't practicing for hypothetical conversations. It's practicing for conversations that will actually happen when they call that account again, or a similar account tomorrow.

After each practice call, the AI scores the session and provides specific improvement suggestions: "Your opener was strong, but you only asked 2 discovery questions. Try asking about their current process before jumping to your pitch."

Building a Practice Culture

Practice doesn't happen unless you make it part of the routine.

Daily individual practice:

10-15 minutes at the start of each day

Focus on the skill area identified in their scorecard

Track practice scores alongside real call scores

Weekly team practice:

30 minutes in team meeting

Everyone practices the same scenario

Share and compare approaches

Identify best techniques and socialize them

New hire ramp:

2-3 hours of practice daily during the first two weeks

Graduate to live calls only after hitting practice benchmarks

Dramatically accelerates time to productivity

Pre-call practice:

Before important calls, practice the specific scenario

Especially valuable for callbacks and follow-ups with known objections
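The "graduate to live calls only after hitting practice benchmarks" rule can be written as a simple gate. The threshold (75) and window (last 5 practice calls) here are hypothetical defaults, not a published standard; set them from what your ramped reps actually score.

```python
def ready_for_live_calls(practice_scores, threshold=75, window=5):
    """True once the last `window` practice-call scores all meet `threshold`.

    practice_scores: chronological list of 0-100 scores from AI practice calls.
    threshold and window are hypothetical defaults; tune them to your team.
    """
    recent = practice_scores[-window:]
    # Require a full window so one lucky call can't graduate a new hire.
    return len(recent) == window and min(recent) >= threshold


if __name__ == "__main__":
    print(ready_for_live_calls([60, 70, 76, 80, 78, 82, 77]))
```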

Part 8: Implementing AI Coaching—A 30/60/90 Day Plan

Days 1-30: Foundation

Week 1: Tool setup and baseline

Deploy your AI coaching platform (Salesfinity AI Coaching integrates with your existing dialer)

Start scoring all calls automatically

Establish baseline metrics for each rep

Don't make any changes yet—just observe

Week 2: Manager enablement

Learn to read scorecards quickly

Practice pulling rep profiles

Run your first benchmarking comparison

Identify obvious patterns and outliers

Week 3: Introduce to the team

Explain what the system does and why

Emphasize: this is for development, not surveillance

Show them their own profiles—transparency builds trust

Set expectations: scores will be part of 1:1s going forward

Week 4: First AI-powered 1:1s

Prep using the new data

Run your first data-driven coaching conversations

Gather feedback: What's useful? What's confusing?

Refine your approach

Goal by Day 30: All calls are being scored. All 1:1s are data-driven. You have baseline metrics for every rep.

Days 31-60: Activation

Weeks 5-6: Launch AI practice

Set up AI training bots with your real prospect personas

Assign daily practice to struggling reps

Create team-wide practice scenarios

Track practice engagement alongside real call scores

Weeks 7-8: Benchmarking and standards

Complete your first deep benchmarking analysis

Identify 3-5 winning patterns from top performers

Document them as team standards

Introduce benchmarks into scorecard expectations

Goal by Day 60: Practice is part of the routine. Team standards are documented. Reps know what "good" looks like.

Days 61-90: Optimization

Weeks 9-10: Measure improvement

Compare current metrics to Day 1 baselines

Identify reps who've improved most (recognize them)

Identify reps who haven't improved (intervene)

Document what's working in your coaching approach

Weeks 11-12: Scale and systematize

Create onboarding playbook using AI coaching data

Build library of best-practice call clips

Establish monthly benchmarking cadence

Train team leads (if applicable) on the methodology

Goal by Day 90: Measurable improvement in team call quality scores. Documented coaching system. Self-sustaining process.

Part 9: Metrics That Prove AI Coaching ROI

You need to justify the investment. Here's what to track.

Leading Indicators (Measure Weekly)

Average call quality score

What it tells you: Overall skill level of the team

Target: Improve 10-15% in first 90 days

Skill-specific scores

What it tells you: Where skills are developing (or not)

Target: No rep below 60 in any category

Practice engagement

What it tells you: Whether reps are using development tools

Target: 15+ minutes of AI practice per rep per day

Score variance

What it tells you: Consistency across the team

Target: Reduce gap between top and bottom performers by 20%
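Three of these leading indicators can be computed from Day 1 baseline and current skill scores. A sketch assuming `{rep: {skill: score}}` dicts on a 0-100 scale; "gap" here is the spread between the highest and lowest per-rep average, which is one reasonable reading of the score-variance target.

```python
from statistics import mean


def _team_average(team):
    return mean(mean(skills.values()) for skills in team.values())


def _spread(team):
    per_rep = [mean(skills.values()) for skills in team.values()]
    return max(per_rep) - min(per_rep)


def leading_indicators(baseline, current, floor=60):
    """Compare current skill scores with the Day 1 baseline."""
    base_avg, base_spread = _team_average(baseline), _spread(baseline)
    return {
        # Target from the text: +10-15% in the first 90 days.
        "avg_score_change_pct": (_team_average(current) - base_avg) / base_avg * 100,
        # Target: an empty list (no rep below the floor in any category).
        "reps_below_floor": sorted(r for r, s in current.items() if min(s.values()) < floor),
        # Target: -20 or lower (top-to-bottom gap shrinks by a fifth).
        "gap_change_pct": ((_spread(current) - base_spread) / base_spread * 100
                           if base_spread else 0.0),
    }


if __name__ == "__main__":
    day_1 = {"Ana": {"opener": 70, "discovery": 60}, "Ben": {"opener": 50, "discovery": 40}}
    day_90 = {"Ana": {"opener": 74, "discovery": 66}, "Ben": {"opener": 62, "discovery": 58}}
    print(leading_indicators(day_1, day_90))
```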

Lagging Indicators (Measure Monthly)

Conversation-to-meeting rate

What it tells you: Whether better calls produce better outcomes

Target: 15-25% improvement in 90 days

Meeting quality (show rate, advancement)

What it tells you: Whether better calls produce better meetings

Target: Show rate above 80%

Ramp time for new hires

What it tells you: Whether AI coaching accelerates onboarding

Target: Reduce time to productivity by 30%

Rep retention

What it tells you: Whether development improves satisfaction

Target: Reduce churn by developing B-players instead of replacing them

The Business Case Math

Let's say AI coaching improves your team's conversation-to-meeting rate by 20%.

Current state:

8 reps

20 conversations per rep per week

15% conversion rate

= 24 meetings per week

With AI coaching:

Same conversations

18% conversion rate (a 20% relative improvement)

= 28.8 meetings per week

That's 4.8 (roughly 5) additional meetings per week. Over 50 working weeks per year, that's 240 additional meetings.

If your average opportunity value is $20,000 and your meeting-to-close rate is 10%, that's:

240 meetings × 10% close rate × $20,000 = $480,000 in additional closed-won revenue

Even a fraction of that justifies the investment in coaching tools.
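The business case is easy to rerun with your own numbers. This Python sketch mirrors the calculation above, and the parameter names are illustrative. Because it carries full precision through every step, it yields 4.8 extra meetings a week, 240 a year, and $480,000. The last step multiplies by a close rate, so the output is expected closed revenue. Swap in a meeting-to-opportunity rate if you want a pipeline figure instead.

```python
def coaching_business_case(
    reps: int = 8,
    conversations_per_rep: int = 20,  # per week
    base_rate: float = 0.15,  # conversation-to-meeting rate today
    relative_lift: float = 0.20,  # 20% relative improvement -> 18%
    weeks_per_year: int = 50,
    close_rate: float = 0.10,  # meeting-to-close rate
    deal_value: float = 20_000,  # average opportunity value, USD
) -> dict:
    """Extra meetings and expected closed revenue from a conversion-rate lift."""
    conversations = reps * conversations_per_rep
    meetings_now = conversations * base_rate
    meetings_after = conversations * base_rate * (1 + relative_lift)
    extra_per_year = (meetings_after - meetings_now) * weeks_per_year
    return {
        "meetings_per_week_now": round(meetings_now, 1),
        "meetings_per_week_after": round(meetings_after, 1),
        "extra_meetings_per_year": round(extra_per_year),
        "extra_revenue": round(extra_per_year * close_rate * deal_value),
    }


print(coaching_business_case())
# Sensitivity check: what if the lift is only 10%?
print(coaching_business_case(relative_lift=0.10))
```

The sensitivity check is the useful part. Halving the assumed lift halves the result, which shows how much of the case rests on that one assumption.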

Part 10: Common Mistakes to Avoid

Mistake 1: Using Scores as Punishment

The fastest way to kill adoption is using AI coaching data to punish reps.

If reps feel like they're being surveilled and scores will be used against them, they'll find ways to game the system or simply disengage.

Fix: Frame scores as developmental data, not performance reviews. Celebrate improvement. Use low scores as coaching opportunities, not write-up evidence.

Mistake 2: Overwhelming with Data

AI coaching generates a lot of data. Most of it isn't actionable for reps.

If you dump a 47-metric scorecard on a rep, they'll shut down. Focus creates progress.

Fix: Each 1:1, focus on ONE skill area. One metric to move. One specific behavior to practice. Depth over breadth.
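Picking the one skill to focus on can be as simple as taking each rep's lowest category score. The minimal sketch below assumes per-rep skill scores are available as a dictionary, and the skill names are hypothetical. You might instead weight by the gap to a team benchmark. Either way, the point is to return exactly one focus area.

```python
def focus_skill(skill_scores: dict[str, float]) -> tuple[str, float]:
    """Return the single lowest-scoring skill and its score: the one thing to work on this week.

    Ties go to whichever skill appears first in the dictionary.
    """
    if not skill_scores:
        raise ValueError("no skill scores for this rep")
    skill = min(skill_scores, key=lambda s: skill_scores[s])
    return skill, skill_scores[skill]


team = {
    "ana": {"opening": 72, "discovery": 55, "objections": 61, "closing": 70},
    "ben": {"opening": 58, "discovery": 74, "objections": 66, "closing": 69},
}
for rep, scores in team.items():
    skill, score = focus_skill(scores)
    print(f"{rep}: focus on {skill} (currently {score})")
```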

Mistake 3: Skipping the Qualitative

Data tells you what's happening. Conversations tell you why.

Don't stop listening to calls entirely. Don't skip the discussion in 1:1s. The AI makes you more efficient; it doesn't replace you.

Fix: Use data to identify which calls to listen to, then actually listen. Use scores to guide conversations, then actually have the conversation.
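One way to let the data choose which calls you actually listen to is to take each rep's calls below a score threshold and keep the lowest one or two. That matches the 1-2 calls per 1:1 in the weekly checklist. This is a minimal sketch. The `Call` record and the default threshold of 60, borrowed from the skill-score floor, are assumptions rather than any platform's export format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Call:
    rep: str
    call_id: str
    score: float  # overall call quality score, assumed 0-100


def calls_to_review(calls: list[Call], threshold: float = 60, per_rep: int = 2) -> dict[str, list[str]]:
    """For each rep, the IDs of up to `per_rep` lowest-scoring calls below `threshold`."""
    below = defaultdict(list)
    for call in calls:
        if call.score < threshold:
            below[call.rep].append(call)
    # Lowest score first; reps with nothing under the threshold are omitted
    return {
        rep: [c.call_id for c in sorted(flagged, key=lambda c: c.score)[:per_rep]]
        for rep, flagged in below.items()
    }


calls = [
    Call("ana", "a1", 72), Call("ana", "a2", 48), Call("ana", "a3", 55), Call("ana", "a4", 41),
    Call("ben", "b1", 66), Call("ben", "b2", 59),
    Call("cal", "c1", 80),
]
print(calls_to_review(calls))
```

The code only picks the calls. Listening to them, and hearing why they scored low, is still your job.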

Mistake 4: Ignoring Top Performers

Most coaching energy goes to struggling reps. That's logical but incomplete.

Top performers have wisdom to share. And even top performers have room to grow.

Fix: Spend benchmarking time understanding what top performers do. Challenge them to keep improving. Use them as peer coaches and examples.
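A simple benchmarking pass shows where top performers pull ahead. This sketch assumes every rep is scored on the same skill categories. It ranks reps by average score, treats the top N as the benchmark group (N=2 here is arbitrary), and reports how far that group sits above everyone else in each skill, largest gap first. Treat the biggest gaps as a pointer to which top-performer calls to study, not as a finished explanation.

```python
from statistics import mean


def top_performer_gaps(team: dict[str, dict[str, float]], top_n: int = 2) -> dict[str, float]:
    """Per-skill gap between the top_n reps (by overall average) and everyone else."""
    if len(team) <= top_n:
        raise ValueError("need more reps than top_n to compare against")
    ranked = sorted(team, key=lambda rep: mean(team[rep].values()), reverse=True)
    top, rest = ranked[:top_n], ranked[top_n:]
    skills = next(iter(team.values())).keys()  # assumes identical skill keys for every rep
    gaps = {
        skill: mean(team[r][skill] for r in top) - mean(team[r][skill] for r in rest)
        for skill in skills
    }
    # Largest gap first: that is where top performers differ most
    return dict(sorted(gaps.items(), key=lambda kv: kv[1], reverse=True))


team = {
    "ana": {"opening": 80, "discovery": 85},
    "ben": {"opening": 78, "discovery": 60},
    "cal": {"opening": 70, "discovery": 55},
    "dee": {"opening": 60, "discovery": 50},
}
print(top_performer_gaps(team))
```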

Mistake 5: Setting It and Forgetting It

AI coaching isn't a one-time setup. Personas need updating. Benchmarks need refreshing. New objections emerge that need practice scenarios.

Fix: Schedule monthly reviews of your AI coaching setup. Are training bots still realistic? Are scorecards measuring what matters? Is the team actually using the tools?

Part 11: What AI Can't Do (And What Only You Can Do)

Let's be clear about limitations.

AI Can't Build Trust

The relationship between manager and rep matters. AI can inform that relationship, but it can't replace the human element of showing you care about their development.

AI Can't Make Judgment Calls

Should this rep be on a PIP or given more time? Is this skill gap trainable or a fundamental fit issue? Is the team's targeting off, or is the market just hard right now? These require human judgment.

AI Can't Motivate

Data can show progress, which is motivating. But real motivation comes from feeling valued, having purpose, and believing in the vision. That's your job.

AI Can't Coach Context

A scorecard might flag a call as low quality, but you have context the AI doesn't. Maybe the prospect was abusive. Maybe the rep was testing a new approach you asked them to try. Human oversight catches what algorithms miss.

What Only You Can Do

Make people feel seen and valued

Decide what matters most for each individual

Navigate organizational politics and priorities

Build team culture and camaraderie

Exercise judgment when data is ambiguous

Model what great looks like through your own behavior

AI is leverage. You're still the leader.

Conclusion: The New Manager Playbook

Let's bring it all together.

The old model of SDR management was brute force: listen to as many calls as you could, give feedback when you caught something, hope your intuition was right about what needed to improve.

The new model is systematic leverage:

AI scores every call so you don't have to listen to all of them

360° profiles show you exactly where each rep stands

Benchmarking reveals what top performers do differently

AI practice lets reps build skills through repetition

Data-driven 1:1s make every coaching conversation count

Tracked metrics prove progress over time

You still do the coaching. You still make the judgment calls. You still build the relationships. But now you have superpowers.

The SDR managers who adopt this model will develop better reps faster, hit quota more consistently, and build teams that actually grow over time.

The ones who don't will keep wondering why their team isn't improving.

The tools exist. The playbook is here. The only question is whether you're going to use them.

Quick Reference: AI Coaching Checklist

Daily (5 minutes)

[ ] Scan yesterday's call scores

[ ] Flag any calls below threshold for review

[ ] Spot-check rep activity levels

Weekly (per rep)

[ ] Pull 360° profile before 1:1

[ ] Identify focus skill area

[ ] Select 1-2 calls to review together

[ ] Run data-driven 1:1 (30 min)

[ ] Document commitment and follow up

Weekly (team)

[ ] Review team-wide metrics

[ ] Identify top performer patterns

[ ] Share best-practice examples in team meeting

[ ] Run team practice session (30 min)

Monthly

[ ] Complete deep benchmarking analysis

[ ] Update team standards based on findings

[ ] Review and update AI training personas

[ ] Measure improvement vs. baseline

[ ] Plan coaching priorities for next month

Ready to transform how your team develops? Salesfinity AI Coaching gives you automatic call scoring, 360° rep profiles, benchmarking, and AI training bots—everything you need to coach at scale without listening to 50 calls a week. See why SDR managers at fast-growing SaaS teams trust Salesfinity to develop their reps faster.
